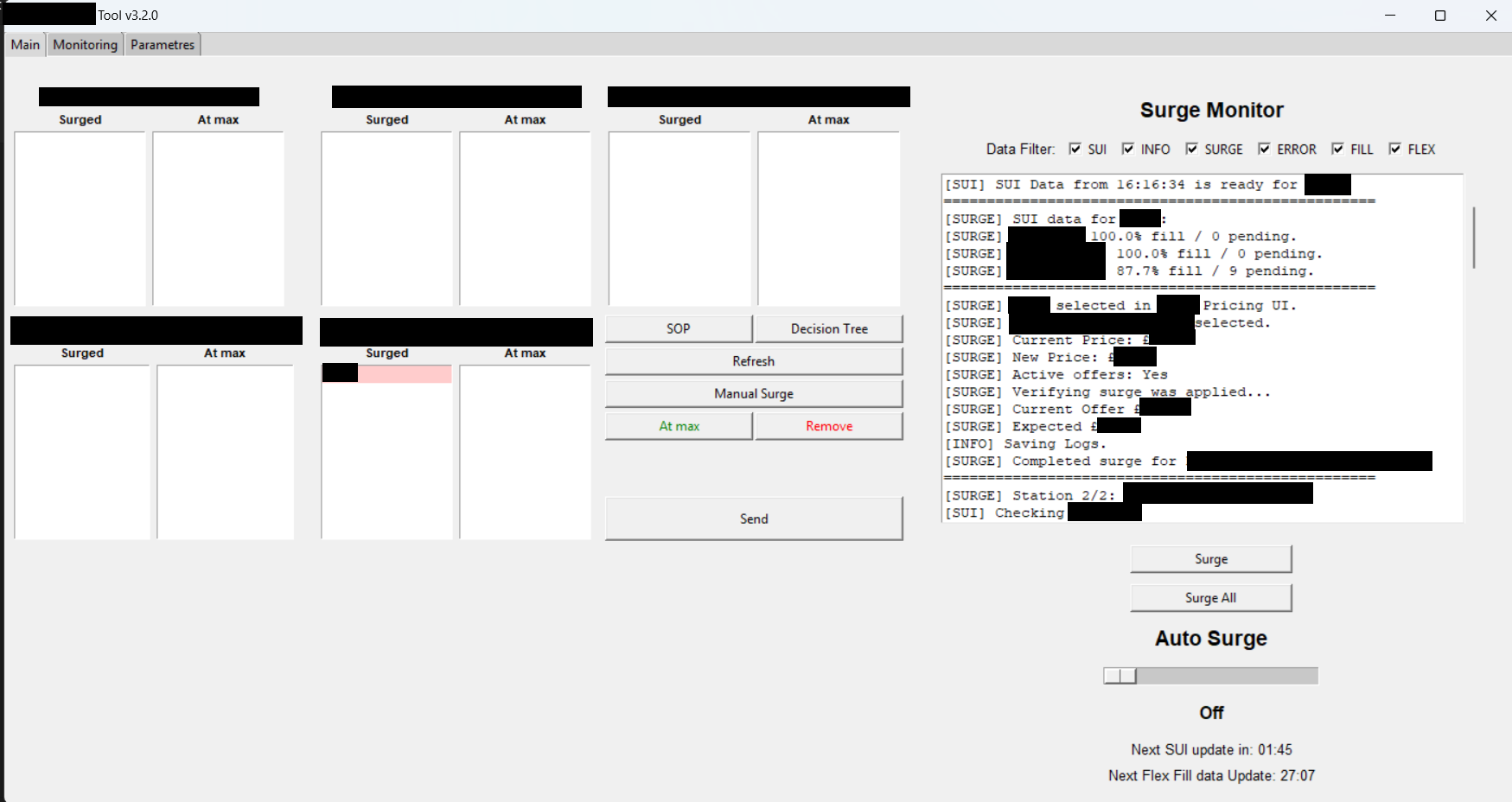

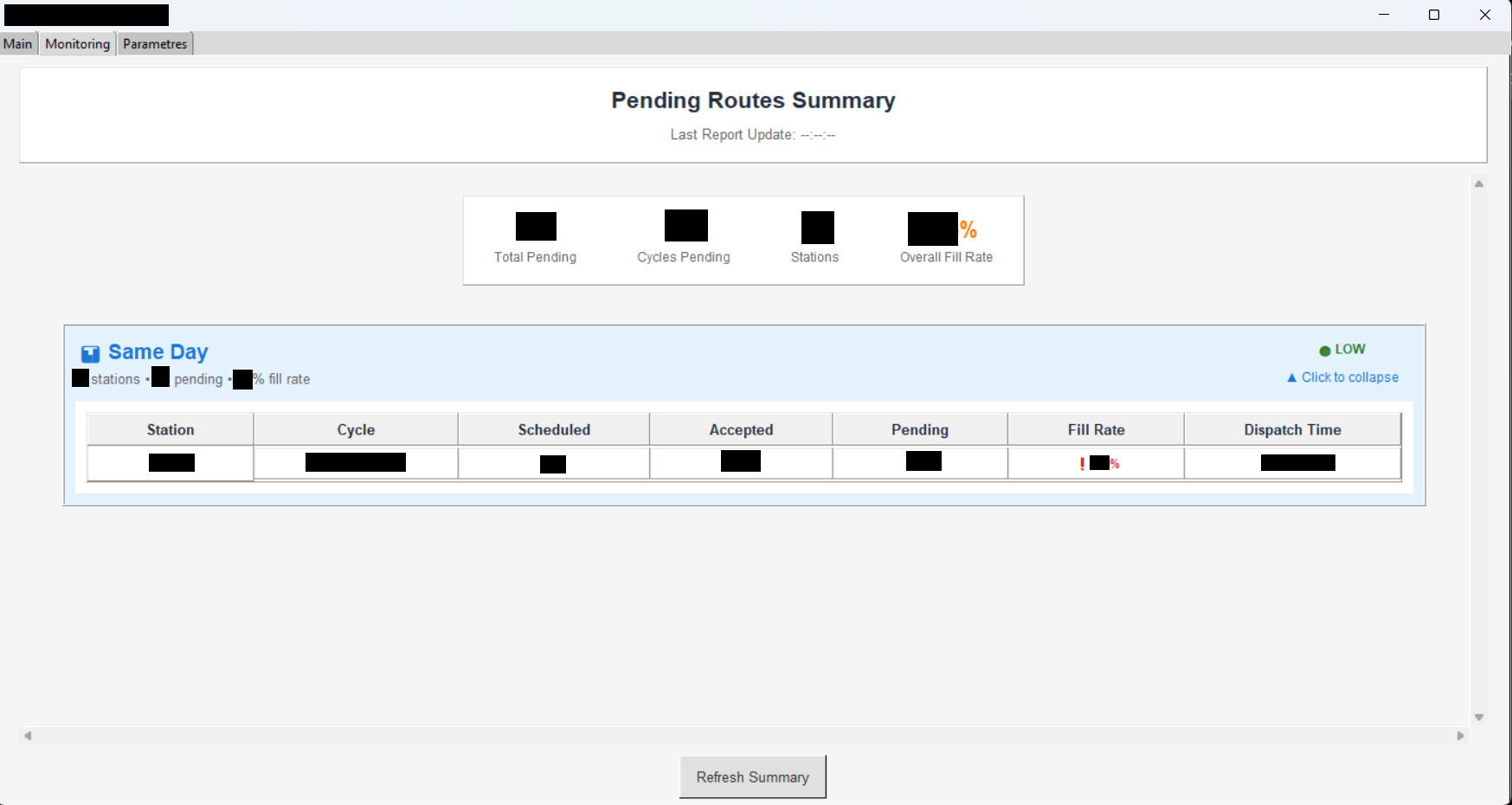

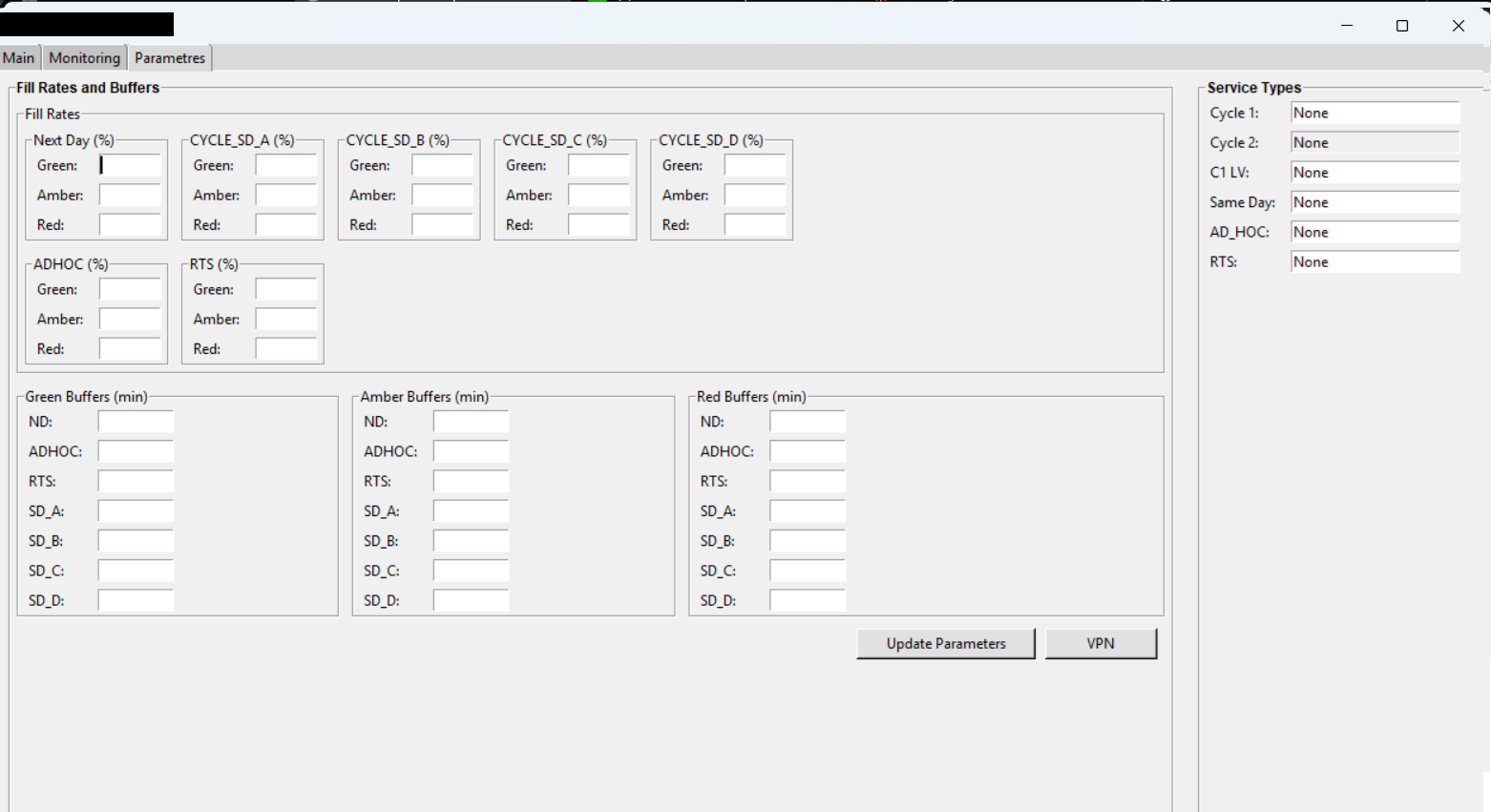

Tool Evolution (Pre-Cloud)

Historical GUI from the manual and semi-automated phases. The tool is now fully cloud-based and requires no interface.

Note: First version interface, no longer in active use. For demonstration purposes only.

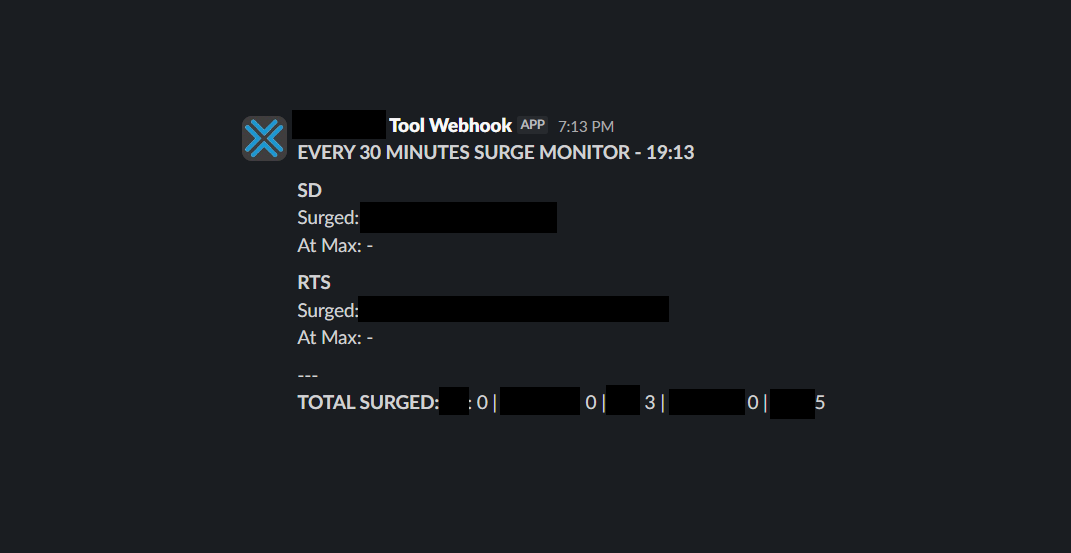

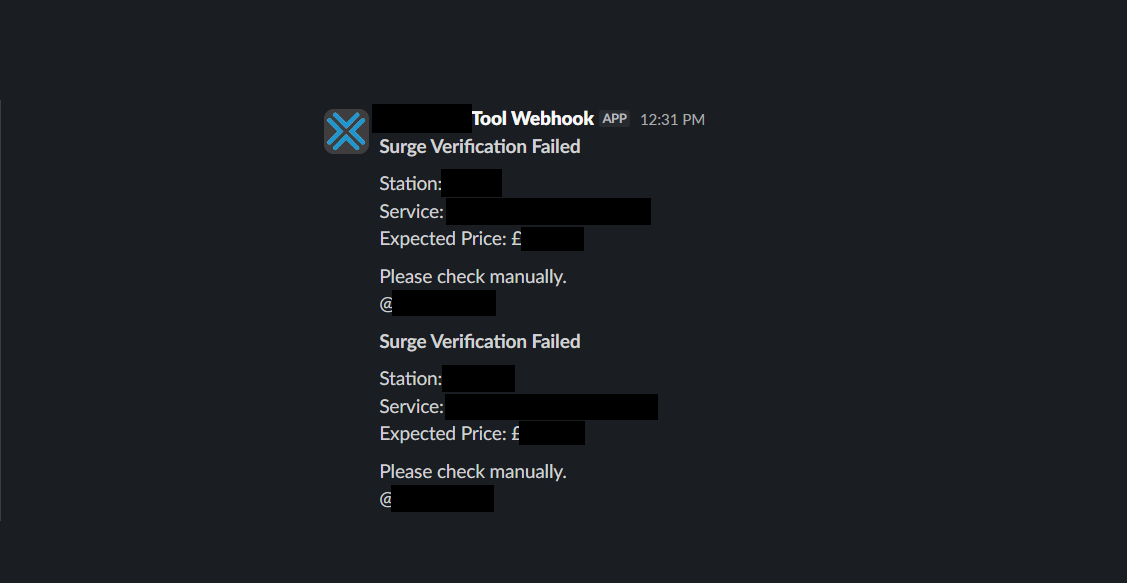

Stakeholder Alerts & Guardrails

Real-time Slack webhooks for successful surges and failure contingencies
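
As a rough illustration of how such alerts could be sent, the sketch below posts a message to a Slack incoming webhook, which accepts a JSON body with a `text` field. The function names, message wording, and the `station` and `detail` fields are assumptions made for this example, not the original tool's code.

```python
import json
import urllib.request

# Hypothetical message prefixes; the original alert wording is not documented.
_PREFIXES = {
    "success": ":white_check_mark: Surge applied",
    "failure": ":warning: Surge failed, contingency required",
}


def build_alert_payload(status, station, detail):
    """Build the JSON body for a Slack incoming webhook message."""
    if status not in _PREFIXES:
        raise ValueError(f"unknown status: {status!r}")
    return {"text": f"{_PREFIXES[status]} for {station}: {detail}"}


def send_alert(webhook_url, payload, opener=urllib.request.urlopen):
    """POST the payload to the webhook URL and return the HTTP status code.

    `opener` is injectable so the function can be exercised without network access.
    """
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with opener(request, timeout=10) as response:
        return response.status
```

Keeping payload construction separate from delivery means the success and failure messages can be checked on their own, and a failed delivery can be handled by whatever contingency path the pipeline uses.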

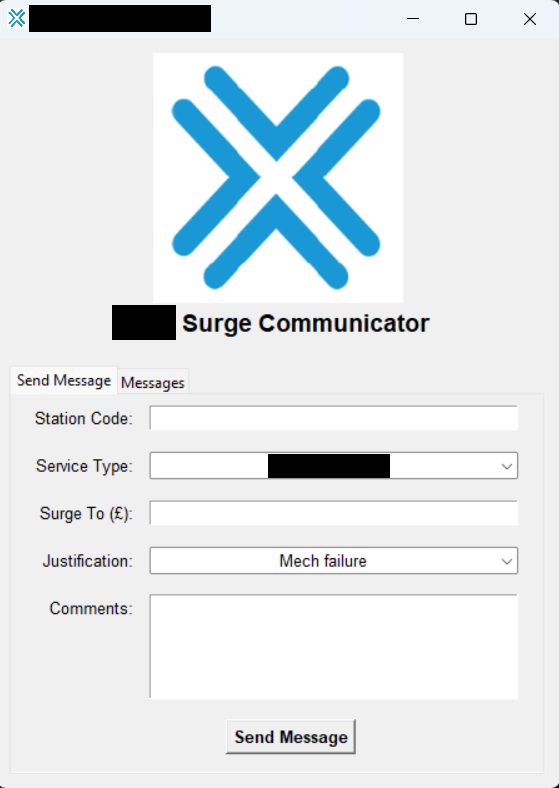

SLA Reduction Through Direct Request Queue

Dedicated tool enabling departments to submit surge requests directly to the processing queue, reducing SLA misses by 10%

The Problem

Once the Fill Rate Optimizer automation started in 2023 Q1, a new bottleneck emerged: other departments (capacity planning, station operations) still needed to manually request price increases for specific scenarios. These manual requests created SLA misses and delayed critical pricing decisions.

The Solution

Built a standalone communicator tool allowing departments to send surge requests directly to a processing queue. Requests were automatically validated, queued, and processed without manual intervention from the Fill Rate Optimizer operator—cutting SLA response time and reducing SLA misses by 10% on average.
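
The validate, queue, and process flow described above could look roughly like the following minimal sketch. The request fields, the `MAX_SURGE_PCT` guardrail, and the function names are hypothetical and chosen only to illustrate the pattern; the actual validation rules are not documented here.

```python
from dataclasses import dataclass
from queue import Empty, Queue

MAX_SURGE_PCT = 50.0  # hypothetical guardrail, not a documented limit


@dataclass(frozen=True)
class SurgeRequest:
    department: str   # e.g. "capacity planning"
    station: str      # hypothetical station identifier
    surge_pct: float  # requested price increase, in percent


def validate(request):
    """Return a list of validation errors (an empty list means valid)."""
    errors = []
    if not request.department.strip():
        errors.append("department is required")
    if not request.station.strip():
        errors.append("station is required")
    if not 0 < request.surge_pct <= MAX_SURGE_PCT:
        errors.append(f"surge_pct must be in (0, {MAX_SURGE_PCT}]")
    return errors


def submit(queue, request):
    """Validate a request and enqueue it only if it passes."""
    errors = validate(request)
    if errors:
        return False, errors
    queue.put(request)
    return True, []


def drain(queue, apply_surge):
    """Process every queued request in FIFO order; return the count processed."""
    processed = 0
    while True:
        try:
            request = queue.get_nowait()
        except Empty:
            return processed
        apply_surge(request)
        processed += 1
```

Rejecting invalid requests at submission time gives the requesting department immediate feedback, while valid requests wait in the queue without needing an operator to relay them.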

Cloud Integration (2024 Q2)

During the Phase 3 cloud migration, the communicator tool was fully integrated into the cloud infrastructure. Requests now flow directly through a back-end API to the cloud processing queue, which maintains the SLA improvement while eliminating the separate desktop application entirely.